Can Crowdsourcing Lead to Artificial Intelligence Tools for Radiation Oncologists?

A worldwide crowd innovation contest incentivized international programmers to create automated AI algorithms for an area of radiation oncology.

A worldwide crowd innovation contest incentivized international programmers to create automated artificial intelligence (AI) algorithms for an area of radiation oncology, a study

“It’s very timely,”

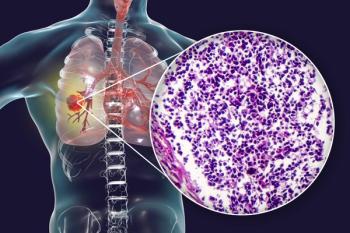

Given the global shortage of radiation oncology expertise and the need to address it, the study researchers created a contest in which programmers from around the world would develop AI algorithms that could replicate a time-consuming, manual task performed by radiation oncologists: segmenting lung tumors for radiation therapy targeting. The contest was designed to last 10 weeks, take place online, run across three phases, and include financial prizes totaling $55,000.

To train and test their algorithms, contestants were supplied with a lung tumor data set that had been segmented by a single radiation oncologist. Contestants also received real-time feedback during each phase about the performance of their algorithm, allowing them to modify it to improve accuracy. Contestants submitted their algorithms at the end of each phase, and prizes were awarded to top performers.

Overall, 34 of the 564 contestants who registered for the challenge (6%) submitted a total of 45 algorithms across the three phases, with the pool shrinking as the phases progressed: 29 algorithms in phase 1, 11 in phase 2, and 5 in phase 3.

Lung tumor segmentation for radiotherapy is known to have considerable interobserver variability. Using the interobserver variability seen among six experts as a benchmark, the top-performing algorithm from phase 3 performed within the interobserver variability range.
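To make the benchmark idea concrete, here is a minimal sketch of how an algorithm's segmentation could be compared against the spread of agreement among experts. The article does not say which metric the study used; the Dice overlap coefficient, the set-of-voxels representation, and the function names `dice`, `interobserver_range`, and `within_benchmark` are all assumptions made for illustration.

```python
from itertools import combinations


def dice(a, b):
    """Dice overlap between two segmentations given as sets of voxel coordinates."""
    if not a and not b:
        return 1.0  # two empty masks agree perfectly by convention
    return 2 * len(a & b) / (len(a) + len(b))


def interobserver_range(expert_masks):
    """Lowest and highest pairwise Dice score among the expert segmentations."""
    scores = [dice(a, b) for a, b in combinations(expert_masks, 2)]
    return min(scores), max(scores)


def within_benchmark(algorithm_mask, expert_masks):
    """Hypothetical criterion: the algorithm's mean Dice against the experts
    is at least as high as the lowest expert-versus-expert agreement."""
    low, _ = interobserver_range(expert_masks)
    algo_scores = [dice(algorithm_mask, m) for m in expert_masks]
    return sum(algo_scores) / len(algo_scores) >= low
```

Under this assumed criterion, an algorithm passes when it agrees with the experts about as well as they agree with one another. A real evaluation would operate on 3D image volumes and might use a different metric or threshold.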

However, although this crowd-based approach did generate algorithms, none can be used in its current form to expand radiation oncology expertise to areas of the world that lack radiation oncologists, which was the overarching goal of the study. In part, this is because the algorithms were trained on a data set segmented by a single radiation oncologist and may therefore contain biases.

“They spent approximately $50,000 to do this. It’s not a huge amount of money, but it’s not a sneeze either,” said Thompson. By comparison, the 2017 Kaggle Data Science Bowl, a project that crowdsourced AI algorithms for early lung cancer detection, awarded $1 million in prizes to contestants. For the current crowdsourcing effort, the relatively low-cost design made the approach more sustainable and scalable than other efforts, but the end result was a “suboptimal product” that can’t be used, he said.
